The minimum viable product (MVP) is central to approaches like the Lean Startup, with its iterative build-measure-learn loop. But not everything called an MVP is one, sometimes not even the things intended to be one. What is often overlooked is that the MVP is not an end in itself but merely a means to an end. The MVP is not the deliverable; the deliverable is what you learn from putting the MVP to the test. The MVP helps you find out what you actually need to build.

As a corporate innovation lab, we have to keep busy, preferably without any accountability. And everybody knows that 9 out of 10 innovations fail, which also means that only in 1 out of 10 cases do we need the full attention of the mother ship. Best, then, to simply build and test MVPs: it keeps us busy and keeps us moving. How?

- Build a technical prototype. It is much easier to fiddle with technology than to understand business opportunities and user needs. It is also much more fun, so let’s focus on technology. It keeps you busy. Naturally, the prototype will fail user acceptance testing, but that is all part of the MVP story, ergo expected, so we just move on to the next technological challenge. We can go on forever.

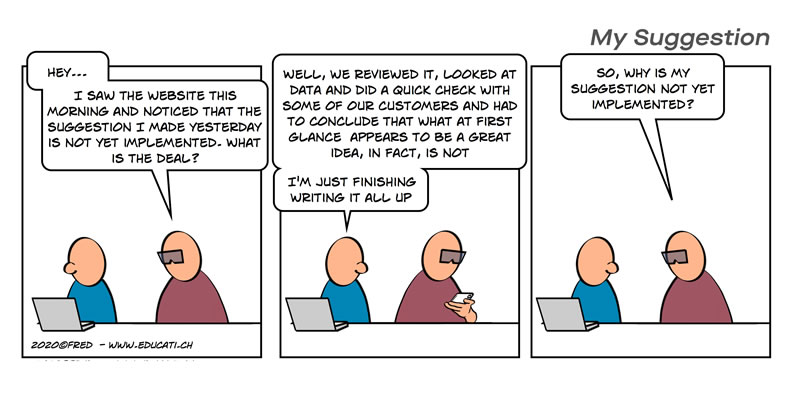

- Let’s keep busy fixing the prototype rather than acting on what we learn. As with a prototype, you may have to throw away the MVP once you have learned what you set out to learn. But as with prototypes, and especially digital prototypes, we seldom do. We fall in love with it and try to make it work even when the data indicates it does not.

- Don’t worry about errors. It is an MVP; errors are expected. Yes, we are professionals and aim to deliver good work, so we spend a lot of time making it sound, and then resist making changes when we learn it needs to be different. And when errors do occur, the often-heard argument is ‘It is just an MVP’. True, but if errors block the exact purpose for which the MVP was built, something went wrong.

- Pretend to be busy. Building an MVP and putting it to the test shows ‘movement’, which is easy currency for keeping stakeholders at bay, at least for some time, whether or not it actually drives progress.

- We are always learning. You need to know what you want to learn and make sure you are testing for exactly that. Defining what to test for and how to test often takes a lot of time and effort. The easy shortcut is to accept that ‘we are just testing’ and therefore do not need to think it through. Just try something, let the users decide, and see if something sticks. This is not a modern challenge, as the following passage from Richard Feynman’s lecture “III – This Unscientific Age” (The Meaning of It All, 1963) illustrates:

> Another thing is the Ranger program. I get sick when I read in the paper about, one after the other, five of them that don’t work. And each time we learn something, and then we don’t continue the program. We’re learning an awful lot. We’re learning that somebody forgot to close a valve, that somebody let sand into another part of the instrument. Sometimes we learn something, but most of the time we learn only that there’s something the matter with our industry, our engineers and our scientists, that the failure of our program, to fail so many times, has no reasonable and simple explanation. […] It’s not worthwhile knowing that we’re always learning something.

Instead of throwing things at the wall to see what sticks, define what you need to test

It is not enough to just build something, an MVP, and test it to see if something sticks. Sometimes this approach works, for instance when you have absolutely no idea where and/or how to start: just try many things, and if you find one that moves the needle, try to find out why and how. But typically, to learn something you need a test with a purpose. You need your assumptions or hypotheses, and metrics to check them against.

Simply build-measure-learn, elaborated a bit further

- Vision: Figure out where you want to go with your product or business.

- Roadmap: Figure out the next important obstacle on the way there. It is important here to identify which observation (data, success criteria) will tell you that the obstacle has been removed. For example, if you are not sure whether your customers are prepared to pay the estimated street value for your service, you can measure the percentage of customers who actually pay or commit to pay.

- Test with an MVP: Figure out the simplest way to get the insights needed to resolve the obstacle, and build and test that. For example, to test whether customers are willing to pay, you can offer a way to sign up for the service that sufficiently signals serious intent: something in between a non-committal “yes, tell me more” and a “pay here” process that is binding for both parties.

- Learn: Based on the test results, formulate your insights:

    - Check whether what you built actually helped you learn what you wanted. What did you learn, and how sure are you about the findings? Were there any biases?

    - Review how the findings changed your view of what the biggest or most important obstacle is. Is it resolved? Is there a different obstacle instead?

    - Review how the findings affect your view of the vision. Is the vision still valid given the new insights?

- Do again: Go back to the first step (Vision) until you meet the criteria to enter the next phase of your development life cycle. Naturally, although you have to check, in most cases the vision and roadmap remain unchanged and you hop from MVP to MVP.

This is basically the build-measure-learn process: define what you need to build to get the insights you need, and build just that; define what you need to measure, and measure that. It is critical to understand that every step is aimed at learning something. You have to define what that is, and the minimal (optimal) thing you must create and do to achieve that learning.
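To make the roadmap, test and learn steps concrete, here is a minimal sketch in Python using the willingness-to-pay example. It writes the hypothesis, its metric and its success criterion down before the MVP is tested, then judges the result against them. The `Hypothesis` class, the `evaluate` function, the 10% threshold and the sample numbers are all hypothetical. Answering "how sure are you about the findings?" with a Wilson score interval is also an assumption of this sketch. It is one reasonable way to account for small samples, not a prescribed part of the process.

```python
import math
from dataclasses import dataclass


@dataclass
class Hypothesis:
    """Hypothetical record of a testable assumption, written down before the MVP is built."""
    statement: str            # what we believe
    metric: str               # what we will measure
    success_threshold: float  # minimum share of visitors that must commit


def wilson_interval(successes: int, trials: int, z: float = 1.96) -> tuple[float, float]:
    """Approximate 95% confidence interval for a proportion (Wilson score interval)."""
    if trials <= 0:
        raise ValueError("need at least one trial")
    p = successes / trials
    denom = 1 + z**2 / trials
    centre = (p + z**2 / (2 * trials)) / denom
    half = z * math.sqrt(p * (1 - p) / trials + z**2 / (4 * trials**2)) / denom
    return centre - half, centre + half


def evaluate(h: Hypothesis, committed: int, visitors: int) -> str:
    """Compare the measured share against the success criterion, allowing for uncertainty."""
    low, high = wilson_interval(committed, visitors)
    if low >= h.success_threshold:
        return "validated"     # even the pessimistic estimate meets the criterion
    if high < h.success_threshold:
        return "invalidated"   # even the optimistic estimate misses it
    return "inconclusive"      # the test, as run, cannot tell us yet


# Hypothetical example: at least 10% of visitors should complete a committing sign-up.
h = Hypothesis(
    statement="Customers will commit to the estimated street price",
    metric="share of visitors who complete the sign-up",
    success_threshold=0.10,
)

for committed in (30, 20, 8):
    print(committed, evaluate(h, committed, visitors=200))
# 30 validated
# 20 inconclusive
# 8 invalidated
```

An "inconclusive" result is itself a finding. It tells you that the test as designed could not answer the question, for instance because too few customers took part, so the next MVP should be designed to close that gap.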